Interview Question: Can You Explain the Lifecycle and Scheduling of a Goroutine?

Key Takeaways

- Go Scheduler Components: G (Goroutine), M (Machine), P (Processor)

- M:N Scheduler: Maps M goroutines to N OS threads, using

GOMAXPROCSthreads simultaneously. - Queues in Scheduler: Global Queue (checked occasionally) and Local Run Queues (per P).

- Work Stealing: P with an empty queue steals tasks from another P.

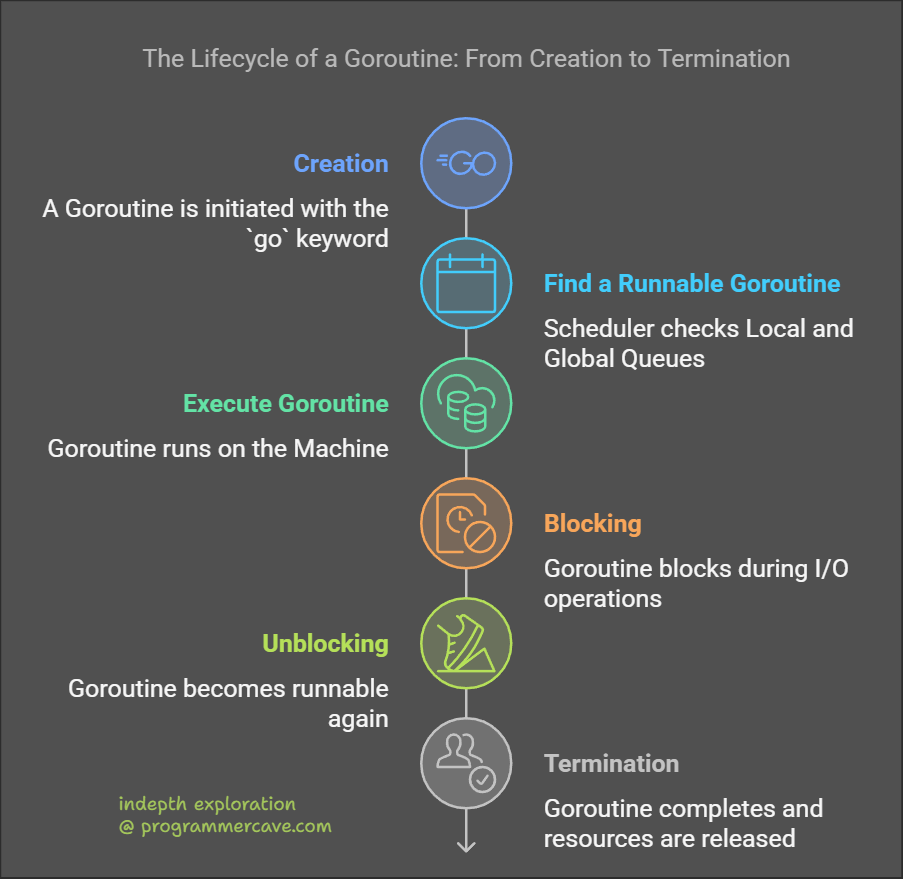

- Goroutine Lifecycle: Creation, Execution, Blocking, Unblocking, Termination.

- Scheduling Algorithm: Checks Local Queue → Global Queue → Work Stealing → Executes goroutine.

1. Key Components in Go Scheduler

-

G (Goroutine):

- In Go, a Goroutine is a lightweight thread of execution managed by the Go runtime, allowing concurrent execution of functions with minimal overhead.

-

M (Machine):

- An M, or Machine, represents an OS thread in Go, responsible for executing Goroutines. It interacts with the operating system’s scheduler to manage low-level thread operations.

-

P (Processor):

- A P, or Processor, is a logical entity in Go that manages Goroutines’ execution and schedules them onto available Machines (M). It keeps track of Goroutines in its local run queue.

2. Go Scheduler Architecture

-

M:N Scheduler:

- The Go scheduler uses an M:N model that maps M Goroutines to N OS threads, optimizing concurrency and resource utilization. It leverages the

GOMAXPROCSvariable to limit the number of threads executing simultaneously.

- The Go scheduler uses an M:N model that maps M Goroutines to N OS threads, optimizing concurrency and resource utilization. It leverages the

-

Global Queue and Local Run Queues:

- Global Queue: The Global Queue stores Goroutines that are waiting to be executed but are not yet assigned to any specific Processor. To maintain performance, it is checked occasionally (about once every 61 cycles) to avoid becoming a bottleneck.

- Local Run Queues: Each Processor (P) maintains its own Local Run Queue to store runnable Goroutines. Processors prioritize executing tasks from their own Local Queue to minimize latency and context switching.

-

Work Stealing:

- Work Stealing is an optimization strategy used in the Go Scheduler to balance workloads across Processors. If a Processor’s Local Queue is empty, it will steal tasks from another Processor’s queue, ensuring efficient distribution of work.

3. Lifecycle of a Goroutine

-

Creation:

- A Goroutine is created using the

gokeyword, which initiates a new concurrent execution of a function. It is then added to the Local Run Queue of the Processor (P) associated with the current Machine (M).

- A Goroutine is created using the

-

Execution:

- Once scheduled, the Goroutine is executed on an available Machine (M) by the Processor (P). It runs until it completes its task, becomes blocked, or is preempted by the Scheduler.

-

Blocking:

- A Goroutine becomes blocked when it performs operations that cannot be completed immediately, such as Network I/O, File I/O, or waiting on a Channel. When this happens, the Machine (M) is detached from the Processor (P) and parked, allowing the Processor to execute other Goroutines.

-

Unblocking:

- After the blocking operation is completed, the Goroutine becomes runnable again. It is then added back to either the Local Run Queue or the Global Queue, depending on the scheduling strategy.

-

Termination:

- A Goroutine terminates when its function completes execution. At this point, its resources are released, and it is removed from the Scheduler’s queues.

4. Scheduling Algorithm

-

Find a Runnable Goroutine:

- The Scheduler first checks the Local Run Queue of the current Processor (P) for runnable Goroutines. If no runnable Goroutines are found, it checks the Global Queue, although this happens only 1/61 times to maintain efficiency and prevent bottlenecks. If both queues are empty, the Scheduler uses Work Stealing to borrow tasks from another Processor’s queue.

-

Execute Goroutine:

- Once a runnable Goroutine is found, it is executed on the associated Machine (M). The Goroutine runs either to completion, until it blocks, or until it is preempted by the Scheduler to ensure fair CPU time allocation.

-

Handle Idle and Spinning Threads:

- To optimize CPU usage, the Scheduler manages idle and spinning threads carefully. Spinning threads actively look for work to minimize latency, while idle threads are parked to save CPU cycles when no work is available. This balance improves both performance and power efficiency.

5. Work Sharing vs. Work Stealing

-

Work Sharing:

- In Work Sharing, tasks are proactively distributed among Processors to balance the workload. This approach involves actively passing tasks to other Processors, ensuring even distribution.

-

Work Stealing:

- In Work Stealing, idle Processors actively take tasks from busy Processors’ queues. This reactive approach improves efficiency by dynamically balancing the workload based on demand.

-

Go uses Work Stealing

- Since version 1.1, Go uses Work Stealing as its primary strategy for load balancing. This method efficiently distributes tasks among Processors, reducing idle time and enhancing concurrency performance.

6. Optimizations in the Scheduler

-

Minimized Handoff:

- The Go Scheduler minimizes the handoff of Goroutines between Processors and Machines to reduce context-switching overhead. It keeps the same Processor-Machine pairing as long as possible, enhancing cache locality and performance. Handoff occurs only when necessary, such as during system calls or blocking operations.

-

Spinning Threads:

- To reduce latency, the Scheduler allows a limited number of spinning threads to remain active while waiting for work. Spinning threads avoid the overhead of sleeping and waking up by actively polling for runnable Goroutines. The number of spinning threads is capped at the value of

GOMAXPROCS, balancing power consumption and responsiveness.

- To reduce latency, the Scheduler allows a limited number of spinning threads to remain active while waiting for work. Spinning threads avoid the overhead of sleeping and waking up by actively polling for runnable Goroutines. The number of spinning threads is capped at the value of

Diagram: Go Scheduler Architecture

Goroutine Lifecycle:

+----------------+ +-----------------+ +----------------+

| Created | ----> | Runnable | ----> | Executed |

+----------------+ +-----------------+ +----------------+

^ | |

| v v

+--------------+ +----------------+

| Blocked | <-----> | Unblocked |

+--------------+ +----------------+

|

v

+----------------+

| Terminated |

+----------------+

Scheduler Workflow:

1. Check Local Run Queue --> Global Queue (1/61 time) --> Steal from others

2. Execute Goroutine

3. Handle Block/Unblock

Processor P Management:

+-----------+ +-----------+ +-----------+

| P1: Q1 | <---> | P2: Q2 | <---> | P3: Q3 |

+-----------+ +-----------+ +-----------+

Conclusion

The Go Scheduler is a powerful and efficient concurrency management system that seamlessly coordinates Goroutines, Machines, and Processors. Its M:N scheduling model allows Go to achieve high levels of concurrency with minimal overhead, making it ideal for building scalable and responsive applications. By leveraging Local Run Queues, Work Stealing, and optimized handling of blocking operations, the Go Scheduler maximizes CPU utilization while minimizing latency and context-switching costs. These design choices make Go a robust choice for concurrent programming, enabling developers to build high-performance systems with ease.