Interview Question: Explain Caching

Caching: The Secret Sauce for High-Performance Systems

If you’re preparing for a software engineering interview, understanding caching is non-negotiable. Caching is a cornerstone of system design, optimizing performance by storing frequently accessed data in fast-access layers. Let’s break it down in simple terms.

What is Caching?

Caching stores copies of data in temporary, high-speed storage (like RAM) to reduce access times. Think of it like keeping your favorite snacks on your desk instead of walking to the kitchen every time you’re hungry—faster access, less effort.

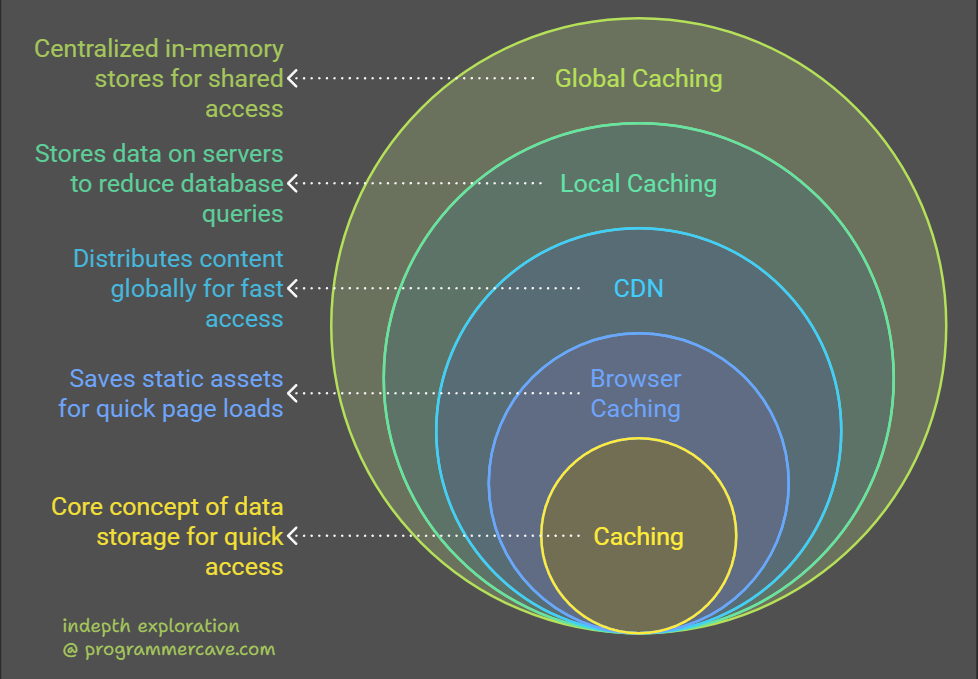

Levels of Caching

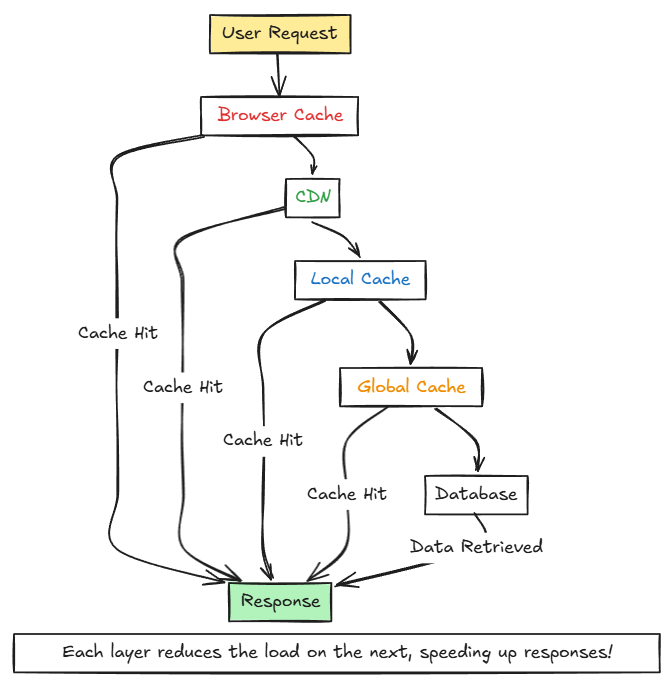

Caching operates at multiple levels to balance speed and resource usage:

1. Browser Caching

- What it does: Saves static assets (images, CSS, JavaScript) and DNS entries locally.

- Why it matters: Faster page loads on revisits (e.g., your Facebook profile loads quickly after the first visit).

2. Content Delivery Network (CDN)

- What it does: Distutes static content (videos, images) across global servers.

- Examples: Cloudflare, Amazon CloudFront.

- How it works: Uses Anycast routing to direct users to the nearest server. Imagine Netflix streaming from a server in your city instead of another continent!

3. Local Caching

- What it does: Stores data directly on the application server to avoid repeated database queries.

- Example: An e-commerce app caching product details for quick access during a sale.

4. Global Caching

- What it does: Uses centralized in-memory stores like Redis or Memcached for shared access across servers.

- Use case: Storing session data for a social media app’s millions of users.

Cache Challenges (And How to Solve Them)

Challenge 1: Data Staleness

Cached data can become outdated if the database updates.

Solutions:

- TTL (Time to Live): Set an expiration time for cached data (e.g., delete cached tweets after 1 hour).

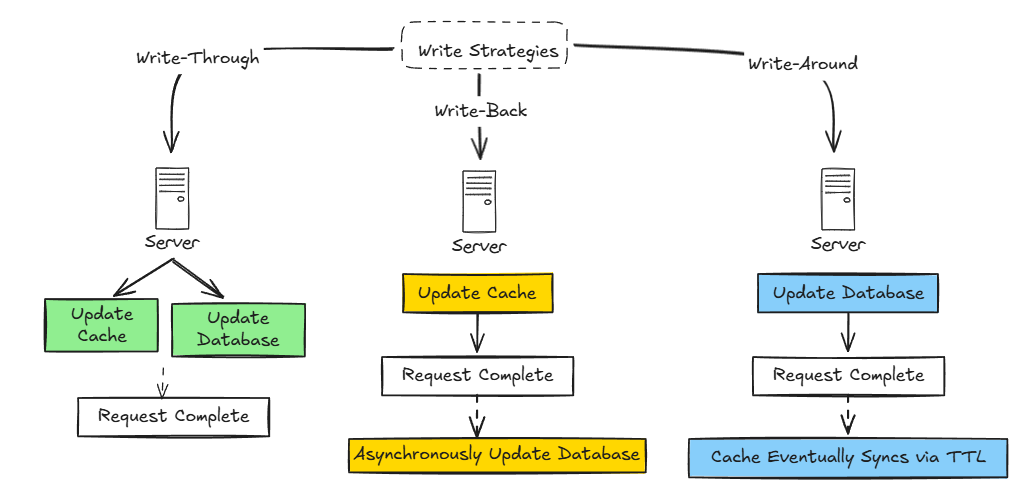

- Write Strategies:

- Write-Through: Update cache and database simultaneously (great for consistency).

- Write-Back: Update cache first, sync with the database later (risk of data loss but faster).

- Write-Around: Bypass cache and update the database directly (cache updates via TTL).

Challenge 2: Limited Cache Size

Caches have finite space. When full, old data must be evicted.

Eviction Strategies:

| Strategy | How It Works | Example Use Case |

|---|---|---|

| FIFO | Removes oldest entries | Logging systems |

| LRU | Removes least recently used | Social media feeds |

| MRU | Removes most recently used | News apps |

| LIFO | Removes newest entries | Undo/redo stacks |

Real-World Examples

- CDN in Action: When you watch a YouTube video, it’s fetched from a server near you, not from YouTube’s main data center.

- Redis for Sessions: Twitter uses Redis to store user sessions, enabling quick logins across devices.

Interview Tips

- Memorize the Levels: Browser → CDN → Local → Global.

- Compare Write Strategies: Use analogies like “write-through is like taking notes in pen; write-back is like using a pencil.”

- Discuss Trade-offs: For example, LRU vs. MRU depends on the data access pattern.

Diagram: How Caching Fits Into a System

Conclusion

Caching is a must-know for optimizing performance in scalable systems. By mastering levels, challenges, and strategies like TTL and LRU, you’ll stand out in interviews. Practice explaining these concepts with real-world examples, and you’ll be interview-ready!

Need a quick recap?

- Levels: Browser, CDN, Local, Global.

- Challenges: Staleness (solve with TTL), Size (solve with eviction).

- Write Strategies: Through, Back, Around.