When to Use sync.Mutex vs. Channels in Go?

Key Takeaways

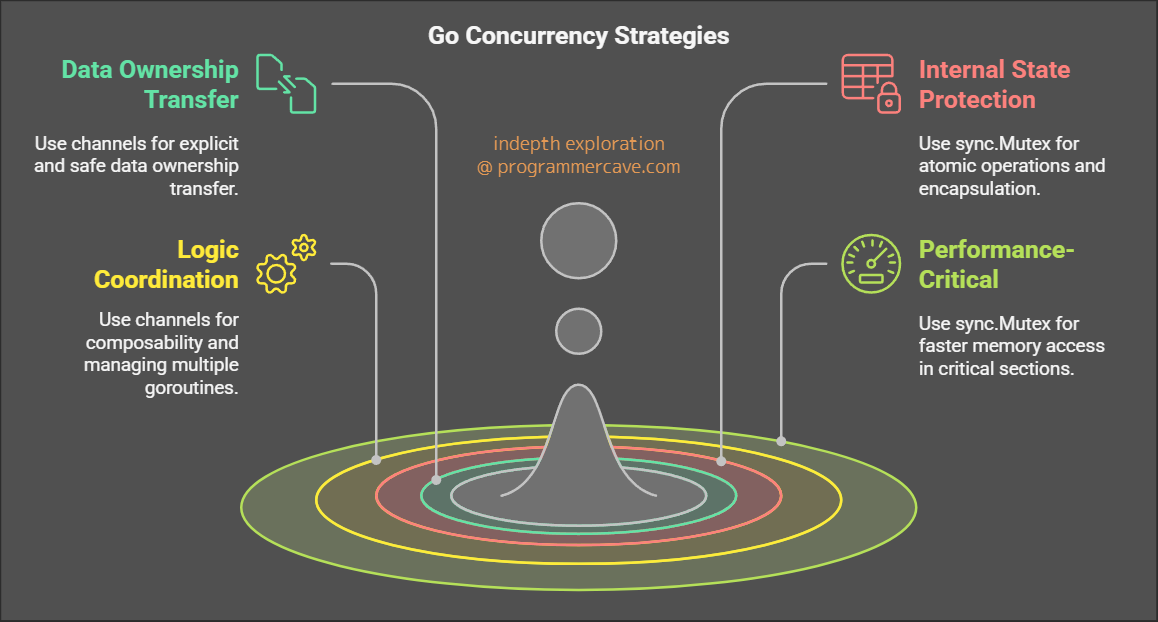

- Use

sync.Mutexfor protecting internal state and ensuring atomic operations. - Use Channels for transferring data ownership and coordinating multiple concurrent tasks.

- Channels improve code readability and safety by avoiding race conditions and deadlocks.

- Mutexes are faster for memory access but should be used sparingly and in performance-critical sections only after profiling.

- Go’s Concurrency Philosophy: Favor simplicity, use channels for communication, and don’t hesitate to use multiple goroutines.

Introduction

Concurrency in Go is a powerful feature that allows you to write highly efficient and scalable applications. Go provides two primary mechanisms for managing concurrency: sync.Mutex for memory synchronization and channels for communication between goroutines. Understanding when to use each is crucial for writing effective concurrent programs.

1. Are You Transferring Ownership of Data?

Definition

Transferring ownership means sharing data produced by one part of the code with another, ensuring that only one concurrent context owns the data at a time.

Solution: Use Channels

- Explicit Ownership: Channels make the transfer of ownership explicit and safe. When you send data through a channel, you are clearly indicating that the receiving goroutine is now responsible for that data.

- Type Safety: Channels are strongly typed, ensuring that only data of a specific type is transferred, which enhances type safety and prevents runtime errors.

- Buffered Queues: Channels can be buffered, allowing you to decouple producers from consumers, which increases flexibility.

- Composability: Channels are inherently more composable, making it easier to build complex concurrent workflows by connecting multiple channels.

Example

ch := make(chan int, 10) // Buffered channel

// Producer

go func() {

for i := 0; i < 10; i++ {

ch <- i // Sending data

}

close(ch) // Closing channel after sending all data

}()

// Consumer

for val := range ch {

fmt.Println(val) // Receiving data

}

2. Are You Guarding Internal State of a Struct?

Scenario

When protecting the internal state of a struct or critical sections of code.

Solution: Use sync.Mutex

- Atomic Operations: Locks ensure that operations on shared data are atomic, preventing race conditions.

- Encapsulation: Mutexes encapsulate complexity, maintaining a simple interface for users of the struct.

Example

type Counter struct {

mu sync.Mutex

value int

}

func (c *Counter) Increment() {

c.mu.Lock() // Lock before accessing shared state

defer c.mu.Unlock() // Unlock after access

c.value++

}

Best Practice

- Keep Locks Small: Keep the scope of locks as small as possible to minimize contention.

- Avoid Exposing Locks: Do not expose locks outside the struct to maintain encapsulation.

3. Are You Coordinating Multiple Pieces of Logic?

Challenge

Composing multiple concurrent operations in a clean and maintainable way.

Solution: Use Channels

- Composability: Channels are inherently more composable, supporting communication between multiple goroutines. This allows you to break down complex logic into simpler, isolated components that are easier to manage and reason about.

- Select Statement: Go’s

selectstatement helps manage complex concurrency patterns by listening to multiple channels.

Understanding the select Statement in Go

Example

ch1 := make(chan string)

ch2 := make(chan string)

select {

case msg1 := <-ch1:

fmt.Println("Received from ch1:", msg1)

case msg2 := <-ch2:

fmt.Println("Received from ch2:", msg2)

default:

fmt.Println("No data received")

}

4. Is It a Performance-Critical Section?

Clarification

Channels involve internal synchronization, which can be slower than direct memory access using sync.Mutex.When data is sent or received through a channel, Go manages synchronization behind the scenes to ensure safe communication between goroutines. This involves blocking and waking up goroutines, which adds overhead compared to the more straightforward locking and unlocking mechanism of sync.Mutex. Therefore, in performance-critical sections where memory access speed is crucial, sync.Mutex might be a better choice.

Approach

- Profile First: Profile your code to identify bottlenecks before optimizing.

- Use

sync.MutexSparingly: Usesync.Mutexin performance-critical sections only when necessary.

Example

var counter int

var mu sync.Mutex

func increment() {

mu.Lock()

counter++

mu.Unlock()

}

Go’s Concurrency Philosophy

- Favor Simplicity: Prefer channels over mutexes for readability and maintainability.

- Use Channels for Communication: Channels naturally model concurrent workflows and improve code safety.

- Don’t Fear Starting Too Many Goroutines: Go’s lightweight goroutines allow extensive concurrency without significant overhead.

Conclusion

In Go, choosing between sync.Mutex and channels depends on the specific requirements of your concurrent program.

-

Use

sync.Mutexwhen you need to protect the internal state of a struct or ensure atomic operations. Mutexes are faster for memory access and should be used in performance-critical sections, but they should be kept to a small scope to minimize contention and maintain simplicity. -

Use Channels when you need to transfer data ownership, coordinate multiple concurrent tasks, or build complex workflows. Channels improve code readability and safety by avoiding race conditions and deadlocks. They are inherently more composable and support communication between multiple goroutines, making them ideal for most concurrency scenarios.

-

Profile Before Optimizing: Always profile your code to identify bottlenecks before deciding to optimize with

sync.Mutex. Channels are generally preferred for their simplicity and maintainability.

By following Go’s concurrency philosophy—favoring simplicity, using channels for communication, and not hesitating to use multiple goroutines—you can write efficient, maintainable, and robust concurrent programs.